
Here Be Dragons

Beware, here be dragons! This project is very unstable right now and will probably remain so until early January.

Introduction

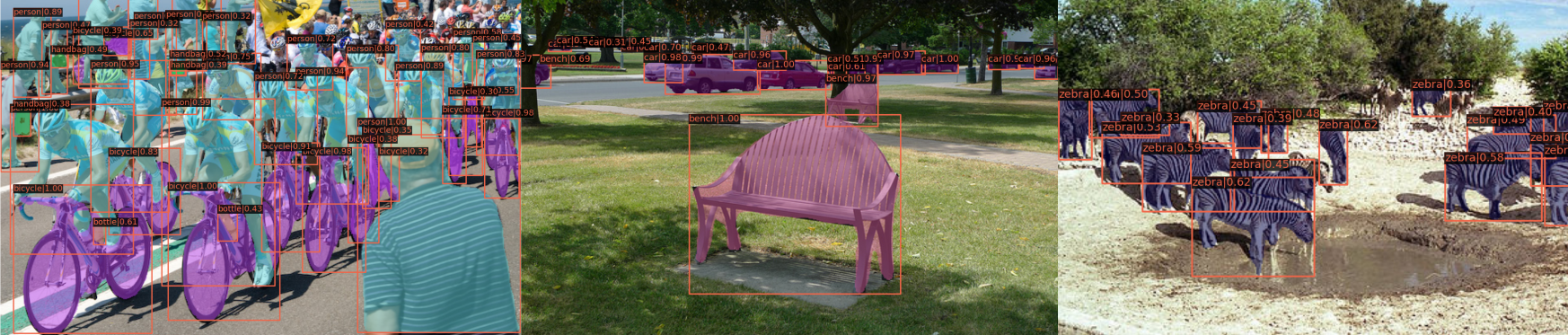

VisDet is an open source object detection toolbox based on PyTorch. This project is a fork of the original MMDetection project, providing an enhanced and modernized detection framework for research and production use.

The master branch works with PyTorch 1.5+.

Major features

- **Modular Design** The detection framework is decomposed into separate components, so a customized object detection framework can be built by combining different modules.

- **Support for multiple frameworks out of the box** The toolbox directly supports popular and contemporary detection frameworks, *e.g.* Faster RCNN, Mask RCNN, and RetinaNet.

- **High efficiency** All basic bbox and mask operations run on GPUs. Training speed is faster than or comparable to other codebases, including [Detectron2](https://github.com/facebookresearch/detectron2).

- **State of the art** Built on a codebase originally developed by the MMDet team (winners of the COCO Detection Challenge in 2018), this fork continues to extend that work with modern improvements.

Roadmap

See our Roadmap for planned features, including:

- SPDL Integration: Thread-based data loading for 74% faster training

- Kornia: GPU-accelerated augmentations

- Python 3.13t: Free-threaded Python support

What's New

For the latest updates and improvements to VisDet, please refer to the changelog.

Installation

Please refer to Installation for installation instructions.

Getting Started

Please see the Getting Started guide for the basic usage of VisDet. Available tutorials:

- learn about configs

- customize datasets

- customize data pipelines

- customize models

- customize runtime settings

- customize losses

- finetuning models

- export a model to ONNX

- export ONNX to TRT

- weight initialization

- how to xxx
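The config and model-customization tutorials listed above build on the modular design described under Major features: each component is registered under a name and the full detector is assembled from a nested config dict. The sketch below illustrates that registry-plus-config pattern in plain Python. It assumes VisDet follows the MMDetection-style approach; `Registry`, `register_module`, `build`, and the `Toy*` classes are hypothetical illustrative names, not VisDet's actual API.

```python
from typing import Any, Callable, Dict, List


class Registry:
    """Hypothetical registry: maps string names to classes so configs can refer to them."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, type] = {}

    def register_module(self) -> Callable[[type], type]:
        # Decorator that records a class under its own class name.
        def decorator(cls: type) -> type:
            if cls.__name__ in self._modules:
                raise KeyError(f"{cls.__name__} is already registered in {self.name}")
            self._modules[cls.__name__] = cls
            return cls

        return decorator

    def build(self, cfg: Dict[str, Any]) -> Any:
        # Shallow-copy so popping "type" leaves the caller's config untouched.
        args = dict(cfg)
        type_name = args.pop("type")
        if type_name not in self._modules:
            raise KeyError(f"{type_name} is not registered in {self.name}")
        return self._modules[type_name](**args)


# One registry per component family (hypothetical names).
BACKBONES = Registry("backbone")
NECKS = Registry("neck")
DETECTORS = Registry("detector")


@BACKBONES.register_module()
class ToyResNet:
    def __init__(self, depth: int) -> None:
        self.depth = depth


@NECKS.register_module()
class ToyFPN:
    def __init__(self, in_channels: List[int], out_channels: int) -> None:
        self.in_channels = in_channels
        self.out_channels = out_channels


@DETECTORS.register_module()
class ToyTwoStageDetector:
    def __init__(self, backbone: Dict[str, Any], neck: Dict[str, Any]) -> None:
        # Sub-components are built recursively from their own config dicts.
        self.backbone = BACKBONES.build(backbone)
        self.neck = NECKS.build(neck)


# The whole model is described by data; swapping a module means editing this dict.
model_cfg = dict(
    type="ToyTwoStageDetector",
    backbone=dict(type="ToyResNet", depth=50),
    neck=dict(type="ToyFPN", in_channels=[256, 512, 1024, 2048], out_channels=256),
)

model = DETECTORS.build(model_cfg)
print(type(model.backbone).__name__, model.backbone.depth, model.neck.out_channels)
# prints "ToyResNet 50 256"
```

Because the detector only ever sees config dicts, replacing `ToyResNet` with another registered backbone is a one-line config change, which is what lets new modules be combined without editing detector code.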

Overview of Benchmark and Model Zoo

Results and models are available in the model zoo.

Supported methods are grouped by task (Object Detection, Instance Segmentation, Panoptic Segmentation, Other) and by component (Backbones, Necks, Loss, Common).

See the model zoo for a complete list of supported methods.

FAQ

Please refer to the documentation for frequently asked questions.

Contributing

We appreciate all contributions to improve VisDet, and community users are welcome to participate in development. Please refer to the contributing guide for guidelines.

Acknowledgement

VisDet is a fork of the original MMDetection project. We acknowledge the original MMDetection team and the broader open source community for their contributions to the detection research field. We appreciate all the contributors who implement their methods or add new features, as well as the users who give valuable feedback.

We hope that the toolbox and benchmark will serve the growing research community by providing a flexible toolkit for reimplementing existing methods and developing new detectors.

Citation

If you use this toolbox or benchmark in your research, please cite the original MMDetection paper:

@article{mmdetection,
  title   = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},
  author  = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and
             Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and
             Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and
             Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and
             Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong
             and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},
  journal = {arXiv preprint arXiv:1906.07155},
  year    = {2019}
}

License

This project is released under the Apache 2.0 license.